Hi All,

Currently I am running an Open-E system in test mode as storage for the XenServer nodes. The system's performance bugs me a little, and I am curious to understand what is causing these less-than-optimal results. Or maybe the results are fine; I don't know, and I would like a second opinion.

The storage server setup

Dell R510

8 GB DDR3 1333 MHz RAM

12x 300 GB SAS disks in RAID 10

PERC H700 controller, 1 GB cache, write-back enabled

4x Intel PRO/1000 (1 Gbit/s) NICs

Network

HP ProCurve 2510 as the storage switch

The storage server is connected with 3x 1 Gbit/s links in an LACP (802.3ad) trunk.

The XenServer node is connected with 2x 1 Gbit/s links in an LACP (802.3ad) trunk.

9K jumbo frames enabled
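For context on the 2 Gbit/s expectation: an 802.3ad trunk generally does not stripe a single stream across its links. The switch and the host each pick one member link per conversation, typically by hashing address fields, so that frames of one flow stay in order; a single flow therefore tends to be limited to one link's speed. The sketch below uses a hypothetical, simplified hash (last MAC octet XOR, modulo the link count, loosely in the style of Linux bonding's layer-2 policy). The MAC octets are made up, and the actual hash used by the ProCurve 2510 or by XenServer's bond is not shown here.

```shell
#!/bin/sh
# Hypothetical, simplified LACP link selection: XOR the last octet of the
# source and destination MAC addresses, then take the result modulo the
# number of links in the trunk. Real switches and bonding drivers use their
# own (often richer) hash inputs.
# Usage: lacp_link SRC_OCTET DST_OCTET LINKS   (octets may be hex, e.g. 0x1a)
lacp_link() {
    echo $(( ($1 ^ $2) % $3 ))
}

# Made-up MAC endings for one XenServer node (0x1a) and the storage server
# (0x2b) on a 2-link trunk: every frame of this pair maps to the same link.
for frame in 1 2 3; do
    echo "frame $frame -> link $(lacp_link 0x1a 0x2b 2)"
done
```

Because the inputs never change for one node talking to one storage server, the chosen link never changes either; only additional conversations (other hosts, or other address pairs if the hash includes IPs or ports) can land on the second link.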

From within a VM (4 cores / 4 GB RAM) on XenServer, I run the following dd command, which writes about 12 GB of zeros to a file:

Which returns:Code:dd if=/dev/zero of=/var/tmpMnt bs=1024 count=12000000

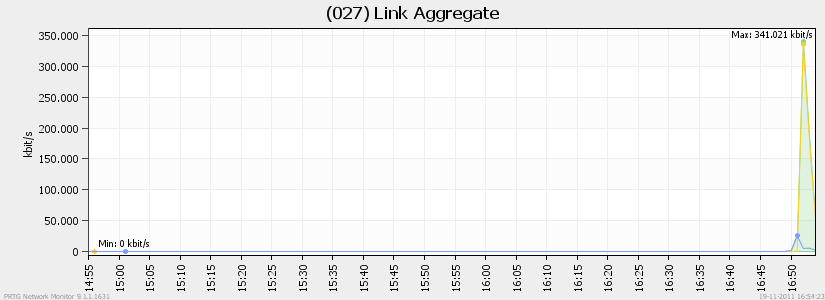

I suppose some of the speed can be cache on the raid controller. However, when looking on the switch via SNMP, the traffic that flows during the copy action.. 400 mbit (see below). It isn't even close to the 2 gbit that (looking from the nodes perspective) I should have available.Code:12000000+0 records in 12000000+0 records out 12288000000 bytes (12 GB) copied, 109.673 seconds, 112 MB/s
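To compare dd's result with the switch graph, the byte count and elapsed time can be converted into Mbit/s. The function below is a minimal sketch (`dd_rate` is a made-up name for illustration); it counts payload only and ignores protocol and Ethernet overhead.

```shell
#!/bin/sh
# Convert dd's reported byte count and elapsed seconds into throughput.
# Usage: dd_rate BYTES SECONDS
dd_rate() {
    awk -v bytes="$1" -v secs="$2" 'BEGIN {
        mb_s   = bytes / secs / 1000000       # decimal MB/s, the unit dd prints
        mbit_s = bytes * 8 / secs / 1000000   # payload megabits per second
        printf "%.0f MB/s = %.0f Mbit/s\n", mb_s, mbit_s
    }'
}

# Figures from the dd run above: 12,288,000,000 bytes in 109.673 s.
dd_rate 12288000000 109.673
```

For the run above it prints `112 MB/s = 896 Mbit/s`, roughly twice the ~400 Mbit/s seen on the switch.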

Is this a performance shortfall, and should I be able to achieve more? Or is this a configuration fault?
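One way to check whether part of the 112 MB/s was absorbed by caching inside the VM is to repeat the same write with a `sync` inside the timed interval. This is a minimal sketch, assuming a Linux guest with GNU `date` (for `+%s`); `flushed_write` is a hypothetical helper name, and with one-second timer resolution the result is only meaningful for runs of a minute or more.

```shell
#!/bin/sh
# Time a dd write *including* a final sync, so data still buffered in the
# guest's page cache is flushed before the clock stops.
# Usage: flushed_write FILE COUNT   (COUNT blocks of 1024 bytes, as in the test above)
flushed_write() {
    start=$(date +%s)
    dd if=/dev/zero of="$1" bs=1024 count="$2" 2>/dev/null || return 1
    sync                                  # wait for dirty pages to be written out
    end=$(date +%s)
    elapsed=$((end - start))
    [ "$elapsed" -gt 0 ] || elapsed=1     # avoid dividing by zero on very short runs
    bytes=$(( $2 * 1024 ))
    awk -v bytes="$bytes" -v secs="$elapsed" \
        'BEGIN { printf "%.0f bytes in %d s, about %.0f MB/s\n", bytes, secs, bytes / secs / 1000000 }'
}

# Same size as the original test (12,288,000,000 bytes); uncomment to run:
# flushed_write /var/tmpMnt 12000000
```

Keeping `bs=1024` and the same count changes only one variable (the flush) relative to the original run, so the two results can be compared directly.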

Any tips or advice would be appreciated.
